So there’s this thing called “Benford’s Law” – and I think that it might be super useful in determining when people aren’t completely honest in survey research.

I’m going to freely admit that I’m operating at the limit of my mathematical understanding, and that I may inadvertently be wandering into New Age Bullshit Generator territory… but I don’t have the skills to know. Let me sketch this idea out first.

So I’m watching Latif Nasser’s Connected on Netflix (highly recommended, by the way), and when I got to episode four, I spent most of it with my head tilted like an inquisitive dog. That episode is all about Benford’s Law.

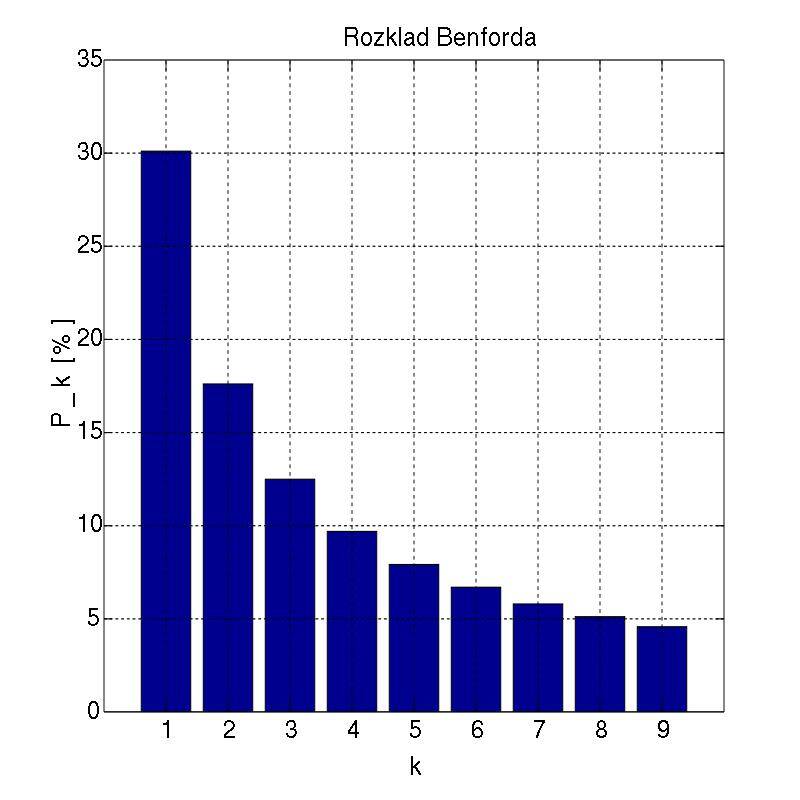

It’s one of those deeply weird things that happens in statistics. To summarize: in many real-world sets of numbers, especially ones that span several orders of magnitude, the first digit is more likely to be a “1” than any other digit. A lot more likely: about 30% of the time, versus roughly 11% if all nine digits were equally likely. A “2” is more likely than a “3”, and so on down to “9”.
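For the curious, the expected share of each leading digit d under Benford’s Law is log10(1 + 1/d). Here is a minimal Python sketch of that formula; the function name `benford_expected` is just my own label, not something from the show or the papers below.

```python
import math

def benford_expected():
    """Return {digit: probability} for leading digits 1-9 under Benford's Law."""
    # P(d) = log10(1 + 1/d); the nine probabilities sum to 1.
    return {d: math.log10(1 + 1 / d) for d in range(1, 10)}

for digit, p in benford_expected().items():
    print(f"{digit}: {p:.1%}")
# First line printed is "1: 30.1%", last line is "9: 4.6%"
```

So a “1” should lead about 30% of the time and a “9” less than 5% of the time.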

This is regularly used in the world of finance to discover fraud, and folks have tried to use it – with varying degrees of success – to analyze for voter fraud.

One of the things that seems to throw this analysis off is strategic voting: “[S]trategic voting considerations, rather than sincere voting, lead voters [to] vote for a party or candidate other than their first preference in order to influence the outcome” [1]. But that doesn’t mean fraud – it can apply in cases where voters can split their vote between candidate and party (as in some areas of Germany), or perhaps even when someone votes for the candidate they think will win instead of the candidate they want.

Which is weird, right?

So I wondered if Benford’s Law (or similar tests) were used with survey data. After all, we’re pretty sure that people respond differently to surveys depending on the social desirability of the response… which sounds a lot like the idea of strategic voting.

Take income data. One paper [2] found the “Benfordness” of reported household income was spot-on… but that of reported individual income was not, indicating that something was fishy. They suggested that individual income was a more sensitive subject and more likely to be misreported.

“[O]ur analysis of harmonized data sets suggests that it is less a matter of origin and preparation than the framing of the question that determines the degree of reliability. In particular, respondents tend to make less reliable statements about their individual income than about household income.”

Benford’s Law As an Indicator of Survey Reliability—Can We Trust Our Data?
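To make “Benfordness” a little more concrete, here is a rough sketch of one common way to check it: a chi-square goodness-of-fit test on first digits. I’m not claiming this is the method the paper used. The helper names and the toy data are my own assumptions, and 15.507 is the standard chi-square critical value for 8 degrees of freedom at the 5% level.

```python
import math
from collections import Counter

# Chi-square critical value for 8 degrees of freedom at alpha = 0.05.
CHI2_CRIT_DF8_05 = 15.507

def first_digit(x):
    """Leading nonzero digit of a nonzero number (hypothetical helper)."""
    # Scientific notation always puts the leading digit first, e.g. '4.2e+04'.
    return int(f"{abs(x):.15e}"[0])

def benford_chi2(values):
    """Chi-square statistic comparing first digits against Benford's Law."""
    digits = [first_digit(v) for v in values if v]  # zeros have no leading digit
    n = len(digits)
    observed = Counter(digits)
    stat = 0.0
    for d in range(1, 10):
        expected = n * math.log10(1 + 1 / d)
        stat += (observed.get(d, 0) - expected) ** 2 / expected
    return stat

# Toy data, NOT real survey responses:
# powers of 2 are a classic Benford-like sequence; 100..999 has flat first digits.
benford_like = [2 ** k for k in range(200)]
flat = list(range(100, 1000))

for name, data in [("powers of 2", benford_like), ("100..999", flat)]:
    stat = benford_chi2(data)
    verdict = "looks Benford-ish" if stat < CHI2_CRIT_DF8_05 else "deviates from Benford"
    print(f"{name}: chi2 = {stat:.1f} ({verdict})")
```

A big statistic only says the digits don’t look Benford-distributed. It can’t tell you why, and plenty of honest data (anything bunched into a narrow range, say) fails this test too.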

I think that author was so close to something. While they were focused on whether or not the set was reliable, I’m interested in testing for that “strategic” quality… and then maybe isolating it out somehow. I’m not so much interested in fraud (in social science research) as in instances where our survey research is either measuring the wrong thing – or people aren’t giving their most honest answers. Hell, it might be super useful in looking at the polls being gathered about the election now.

Again, I’m operating at the limit of my (current) math skills here. I’m hoping that someone reading this either knows enough statistics and math to tell me that this idea is completely woo-woo, that someone else has already done it and I’m not aware of it, or… well, or that it’s something useful.

More research, as they say, is necessary.

In the meantime, go watch Connected.

Featured Photo by Jeswin Thomas on Unsplash

[1] Searching for electoral irregularities in an established democracy: Applying Benford’s Law tests to Bundestag elections in Unified Germany

[2] Benford’s Law As an Indicator of Survey Reliability—Can We Trust Our Data?