Science reporting (and research) had its flaws even before the current ideological imposition on it; these four articles not only illustrate the problems but also give you some good ideas of what to watch out for.

Cause And Effect

When there’s breathless coverage about the dangers of something, particularly something that isn’t part of “polite” society, you have to be especially careful about any implied causation. The most recent example is research out of Canada, and the reporting sure makes it seem like ingesting THC could lead to schizophrenia… but they quite likely have the causality of the thing backward.

The study itself notes at the end of the abstract that after weed legalization in Canada, the rates of schizophrenia remained stable. What it actually says is that, among people who developed schizophrenia, the percentage who also used marijuana to the extent that it counted as a “disorder” increased.

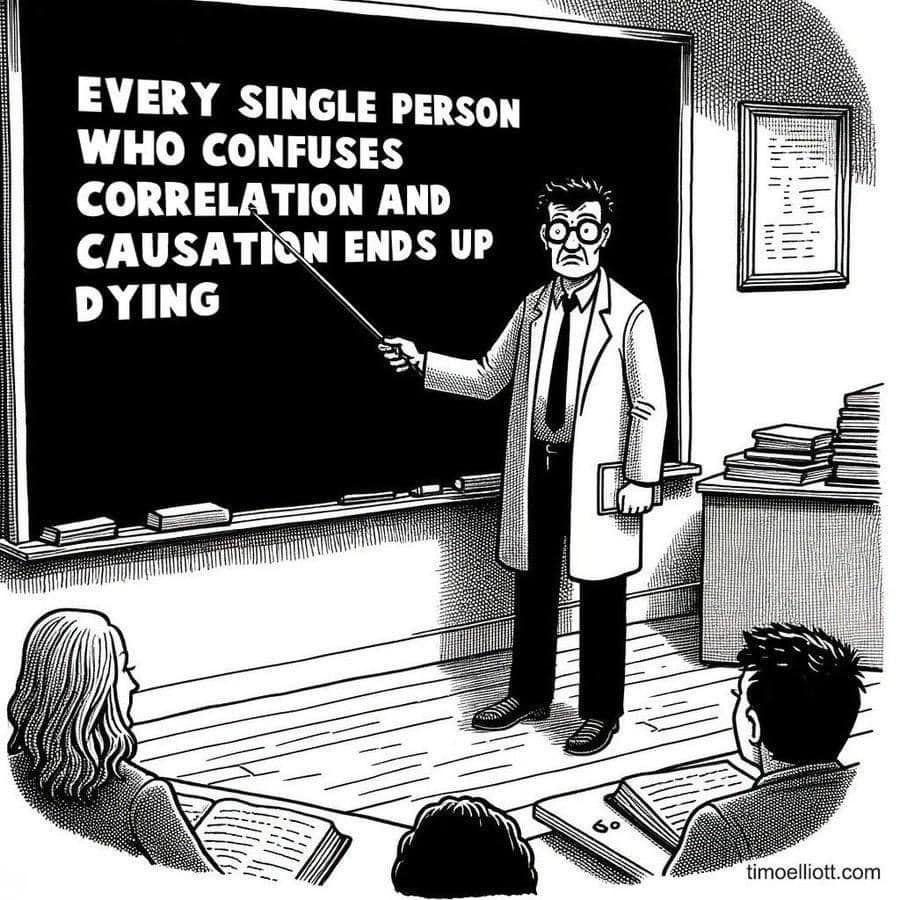
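Here’s a small sketch of that distinction in Python. Every count in it is invented for illustration (none of these numbers come from the study); it only shows that the overall rate of new cases can stay flat while the share of cases involving a use disorder goes up.

```python
# Hypothetical illustration: every count here is invented, not taken from the study.
# Counts are per 100,000 people in two made-up time periods.
periods = {
    "before": {"new_cases": 60, "with_use_disorder": 3},
    "after": {"new_cases": 60, "with_use_disorder": 6},
}

shares = {}
for label, counts in periods.items():
    # Share of new cases that also involve a use disorder.
    shares[label] = counts["with_use_disorder"] / counts["new_cases"]
    print(f"{label}: {counts['new_cases']} new cases, "
          f"{shares[label]:.0%} with a use disorder")

# The overall rate and the share within cases are separate quantities.
incidence_changed = periods["before"]["new_cases"] != periods["after"]["new_cases"]
share_rose = shares["after"] > shares["before"]
print(f"incidence changed: {incidence_changed}, share rose: {share_rose}")
```

The sketch doesn’t tell you which explanation is right. It just shows that the two numbers can move independently, which is why a headline about one of them can’t stand in for the other.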

The most likely hypothesis? More people whose schizophrenia had not yet been addressed by the healthcare system self-medicated in an attempt to control their own mental health. Self-medication (whether you’re using pot, booze, carbs, or something else) is not a good thing. But there is a huge difference between that and implying that THC use leads to an increased risk of developing schizophrenia. The two may be correlated, but correlation is very different from causation.

This is not just a bit of pedantry; the implications have real-world effects. To me, this study seems to indicate that the best intervention would be outreach and increased availability of psychiatric services to those self-medicating.

Morning Happiness

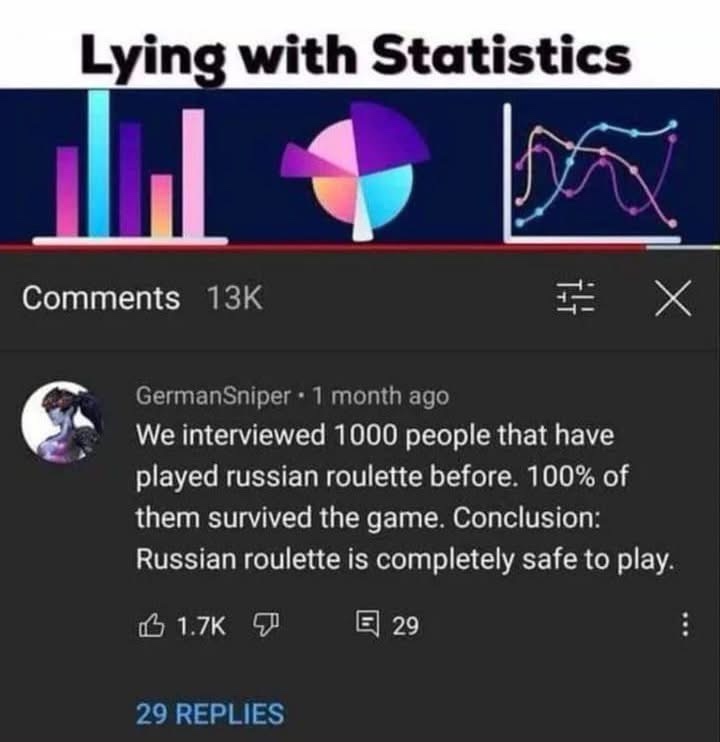

Sample bias is still a real thing, as seen in this study that claims that “everyone is happiest in the morning.” A lot of psychological and sociological research already suffers from sample bias — including my own — because it uses a sample of convenience, often college students.

That problem is intensified when you realize that most studies of the general population do not take neurodivergence into account.

Neurodivergence — including, but not limited to, autism and ADHD — is frequently viewed through a “disease model,” rather than as a difference. It’s somewhat similar to the ways that homosexuality and handedness were treated (for just two examples of many) for a very long time. Autism and ADHD have components that can be very challenging, absolutely. An argument can be made that “neurotypical” makeups also have problematic elements. They’re differences, not diseases.

Sometimes those differences are minor, but significant.

In this case, getting up in the morning.

It’s fairly common for those with ADHD to be night owls. But with a prevalence estimated anywhere from nearly 15% to as low as 4% (and varying by gender), if you fail to separate out this confounding variable, your study results are trash and not generalizable.

Because the researchers did not distinguish between neurotypes, this study claiming that “everyone” is happiest in the mornings is equivalent to a 1950s researcher saying that “children are happiest with right-handed writing materials.” Sure, that’s true for right-handed people, but for the 10%-12% of lefties, that’s absolutely wrong.
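To see how a subgroup can vanish inside an average, here’s a minimal Python sketch. All of the numbers are made up for illustration: the mood scores, the 0-10 scale, and the 90/10 split are assumptions of mine, not figures from the study.

```python
# Hypothetical illustration: all numbers below are invented, not taken from the study.

def weighted_mean(groups, time_of_day):
    """Population-wide average mood at a given time, weighted by group share."""
    return sum(g["share"] * g["mood"][time_of_day] for g in groups)

# Two made-up groups with opposite daily patterns (mood on a 0-10 scale).
groups = [
    {"name": "morning types", "share": 0.90, "mood": {"morning": 7.0, "evening": 5.0}},
    {"name": "night owls", "share": 0.10, "mood": {"morning": 4.0, "evening": 7.0}},
]

overall_morning = weighted_mean(groups, "morning")
overall_evening = weighted_mean(groups, "evening")
print(f"overall morning: {overall_morning:.2f}")
print(f"overall evening: {overall_evening:.2f}")

# Look at each group separately instead of only at the pooled average.
happiest = {g["name"]: max(g["mood"], key=g["mood"].get) for g in groups}
for name, best in happiest.items():
    print(f"{name}: happiest in the {best}")
```

With these invented numbers, the pooled average says mornings win, while the smaller group’s pattern runs the opposite way. You only see that if the analysis breaks the sample out by group.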

Burying The Most Important Part

Likewise, this study, which claims that oral contraceptives protect against ovarian cancer, does not distinguish between the types of oral hormonal birth control. While I’ve never taken any, I know lots of women who have shared with me that different brands and formulations have very different effects on their bodies. Does that affect the results of this study? We cannot know because the researchers didn’t bother to check.

More concerning to me is how the reporting buried the most stunning and useful incidental finding I’ve seen in a long time: “We also identified blood biomarkers associated with ovarian cancer years before diagnoses, warranting further investigation.”

THEY IDENTIFIED BIOMARKERS THAT COULD LEAD TO A BLOOD TEST FOR OVARIAN CANCER. That’s huge! I’d say they should get an NIH grant, except… well.

That SOUNDS Like A Lot…

Speaking of the current political climate, that brings us finally to context. With the anti-trans bigots pumping their hatred into the public discourse, you’ve probably heard mention of transgender people who have “decision regret” after receiving gender-affirming care. This does happen, though it’s pretty rare. Measuring that rate is difficult, as different researchers used different metrics for what “counted” as “regret” (and after what kinds of procedures), leading to estimates ranging somewhere between 1% and 8%.

This figure has been weaponized by politicians and political opportunists (looking at you, Chloe Cole) as a reason to halt gender-affirming care.

Before you wring your hands too much about it or think they’re making a good point, it’s worth noting that studies of women who were treated for early breast cancer found rates of decision regret about their treatment as high as 69%.

The researchers — as reported by Oncology Nurse Advisor — found that “decision regret was influenced by multiple factors, including patient demographics, decision-making processes, and mental health, necessitating targeted interventions to mitigate its impact.”

If you’re wondering why that statistic isn’t getting the same kind of press, or why the suggested solution isn’t more and better support for transgender people as it is for women being treated for breast cancer, or why a political party isn’t making it a giant talking point, the answer is simple.

It’s bigotry, my friends.

What’s The Point?

The point?

For those doing the research: Do better with your research questions and conclusions. Communicate your findings, including the nuance, clearly to reporters; don’t trust them to understand.

For the rest of us: Think about what you read. Dig a little further, even if it seems to support your point of view. While reporters will misunderstand and (unintentionally) misrepresent findings, and politicians will deliberately lie, there is truth out there.

But it’s rarely as simple as a soundbite.