We are at the front of an AI-powered storm of website spam, monetized by advertisements and the darkest of SEO optimization. While I focus on those scraping news websites for this proof-of-concept, this problem will affect every sector of industry.

Worse, neither domain-blocking or AI itself will be sufficient to deal with the problem.

I’ve written up several examples previously — both on the blog and on Mastodon. Despite the growing number (and relative sophistication) of such sites, it’s been relatively difficult to convince others that this is something to take seriously.

So to prove the concept, I made one myself.

It took less than 90 minutes and cost me a dime.

What To Trust On The Internet

Concerns about the validity of information on the internet have existed… well, before the internet, at least as we know it today. Back in the days of Usenet and dial-up BBSes users (including myself!) would distribute text files of all sorts of information that was otherwise difficult to find.

At first, it was often easy to determine what websites were reputable or not. Sometimes simply the domain (Geocities, anyone?) would cause you to examine what the website said more closely.

As the the web has matured, more and more tools have been created to be able to create a professional-looking website fairly quickly. WordPress, Squarespace, and many, many more solutions are out there to be able to create something that is of professional quality in hours.

With the rise of containerization and automation tools like Ansible, once originally configured, deploying a new website can literally be a matter of a few minutes — including plug-ins for showing ads and cross-site linking to increase listings in search rankings.

But even with all that help, you still had to make something to put in that website.

That’s a trickier proposition; as millions of abandoned blogs and websites attest, consistently creating content is hard.

But now even that is trivial.

Starting from scratch — no research ahead of time! — I figured out how to automate scraping the content off of websites, feed it into ChatGPT, and then post it to a (reasonably) professional looking website that I set up from scratch in less than 90 minutes.

It took longer to write this post than it did to set everything up.

And — with the exception of the program used to query ChatGPT — every program I used is over two decades old, and every last one of them can be automated on the command line. {1}

The Steps I Took

The most complicated part was writing a bash script to iterate over everything. Here’s what I did:

NOTE : I have left out a few bits and stumbling blocks on purpose. The point is to show how easy this was, not to write a complete "how to." Also, I’m sure there are ways to do this more efficiently. This was intended solely as a proof of concept.

-

Install elinks, grep, curl, wget, and sed. This is trivial on linux-like systems, and not too difficult on OSX or Windows.

-

Get a ChatGPT API key. I spent a grand total of $0.07 doing all the testing for this article.

-

Install the cross-platform program mods (which is actually quite cleverly and well done, kudos to the authors).

-

Find the site that you want to scrape. Download their front page with

elinks URL --dump > outfile.txt -

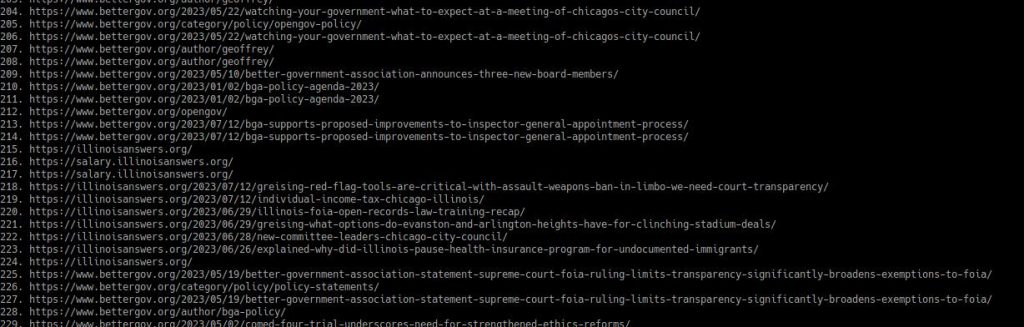

Examine the end of that file, which has a list of URLs. Practically every site with regularly posted content (like news) will have a pretty simple structure to their URLs due to the CMS (software to manage the site) often being the same.

For example, over 200 news sites use WordPress’ "Newspack" product. One of those is the Better Government Association of Chicago. Every URL on that website which leads to an article has the formhttps://www.bettergov.org/YEAR/MONTH/DAY/TITLE_OF_ARTICLE/.

-

Use something like this to get the links you want:

grep -e ". https://bettergov.org/2023" outfile.txt | awk -F " " '{print $2}' | sort | uniq > list_of_urls.txt.

Grep searches for that URL pattern in the file, awk cuts off the number at the beginning of the line, sort… well, sorts them, and uniq ensures there are no duplicates. -

Download the HTML of each of those pages to a separate directory:

wget -i ./list_of_urls.txt --trust-server-names -P /directory/to/put/files -

Create a script to loop over each file in that directory.

-

For each file in that directory do

mods "reword this text" < infile -

Format that output slightly using WordPress shortcodes so that you can post-by-email.

All of this is "do once" work. It will continue to run automatically with no further human input.

You can see the output (using one of my own posts) at https://toppolitics9.wordpress.com/2023/07/22/chatgpt-reworked-this/. If I was going to actually do something like this, I’d setup WordPress with another hosting company so that I could use add-ons to incorporate featured images and — most importantly — host ads.

Simply Blocking Domains Will Not Work

A key element here is that once you’re at the "sending the email" step, you can just send that post to as many WordPress sites as you can set up.

Spam — because that’s what this is — is about volume, not quality. It does not matter that the reworded news articles now have factual errors. It does not matter that a large percentage of people wouldn’t look at a website titled "Top Politics News" — as long as some did.

The ten cents I spent testing — now that I’ve figured out how to chain things together — could have been used to reproduce most of the articles featured on CNN’s front page and pushed out to innumerable websites, though who knows how many errors would have been created in the process.

Just as simply blocking e-mail addresses is only a partial solution to e-mail spam, domain blocking will only have limited effectiveness against this tactic. Because of the rewording, it is difficult to prove a copyright claim, or to take the website owners to court (assuming they can even be found). Because the goal is not to provide accurate information, taking a site down and setting it up again under a different domain is no big deal.

This Is About Every Industry

I’ve focused here on spam websites that scrape news websites because of my "day job", but this will impact every industry in some form. Because the goal is to gain pageviews and ad impressions (instead of deliberate misinformation), no sector of the market will be unaffected.

Anything you search for online will be affected.

Finding medical advice. How to do various home repairs. Information about nutrition and allergies about food in grocery stores and restaurants. Shopping sites offering (non-working) copies of the current "cool" thing to buy. Birding news. Recipes.

All easily scraped, altered, and posted online. {2}

Literally anything that people are searching for — which is not difficult to find out — can, and will, have to deal with this kind of spam and the incorrect information it spreads.

A Problem AI Made And Cannot Fix

And we cannot expect the technology that created the problem to fix it, either.

I took the article of mine that ChatGPT reworded, and fed it back to the AI. I asked ChatGPT, "Was this article written by an AI?"

ChatGPT provided a quick reply about the article it had reworded only minutes before.

"As an AI language model, I can confirm that this article was not written by an AI. It was likely written by a human author, sharing their personal experience and opinions on the topic of misleading statistics."

{1} For the fellow nerds: Elinks was created in 2001, curl and wget in 1996, bash in 1988, sed in 1974, uniq and grep in 1973, and sort in 1971.

{2} There is often some kind of disclaimer on the examples I’ve personally seen, hidden somewhere on the website, saying they make no guarantees about the truth of the information on thier site. As if people actually look at those pages.

Featured Image (because I have a sense of irony) based off an AI creation from NightCafe.